Last week Google released Bard, its long-awaited ChatGPT competitor, in a limited beta to users in the US and the UK. I was lucky to be one of the first beta testers of the product and wanted to understand how these two AI systems compare to each other.

So, what did I do? I asked Bard how it compares to GPT-4. Here is what it gave me:

Google Bard does acknowledge that GPT-4 is trained on a much larger dataset and provides answers with better accuracy and fluency. But it also says that Bard is more ‘creative’ and ‘engaging’ than GPT-4.

The answer intrigued me: is Bard really more creative than GPT-4? How would the two systems compare when given similar prompts in 10 different categories, including creativity, understanding of vague terms, technical queries, languages, math, and the ability to synthesize?

I put together a list of 10 criteria to compare Bard with GPT-4.

Ability to answer questions with complex phrasing

Ability to generate creative ideas based on a given context

Ability to handle domain-specific/technical queries

Ability to provide actionable advice

Ability to generate well-structured and coherent content

Ability to perform basic calculations

Ability to generate answers in different languages

Ability to provide concise summaries and key takeaways

Ability to adapt to different tones and styles

Ability to engage in multi-turn conversation while maintaining context and coherence

I gave a star rating to each answer generated by Bard and GPT-4, with 5 stars being the highest score. At the end, I summed up all the points and came up with a final score. The final result might surprise you.

Ability to answer questions with complex phrasing

Bard: ⭐️⭐️⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

I started the challenge with a complex question about the ‘ship of Theseus’ and its philosophical implications. Both Bard and GPT-4 understood the phrasing and provided accurate answers that highlighted the complexity of the thought experiment and its various interpretations. GPT-4, however, gave better context for the story and elaborated more fully on some of the key implications of the paradox. As someone who didn’t know this concept previously, I learned a lot more from GPT-4 than from Google Bard. This is why I gave 5 stars to GPT-4 and 4 stars to Bard.

Here is what Google Bard came up with:

And this is the answer from ChatGPT using the GPT-4 language model:

Ability to generate creative ideas based on a given context

Bard: ⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

Bard claimed that it was more creative than GPT-4. What better way to test that than to have it come up with creative marketing campaigns for an eco-friendly clothing brand? But when given the challenge, Bard provided anything but ‘creative’ answers. Its response was bland and basic, as if taken from some SEO-optimized blog post that ranks well but doesn’t add value. Some of its answers were ‘offset your carbon emissions’ or ‘educate customers about sustainability’. GPT-4, on the other hand, came up with a ‘Wear the Change’ challenge, a 30-day challenge to wear only eco-friendly clothing, and an upcycling contest ‘where customers transform old clothing into new, fashionable pieces.’ GPT-4 provided answers that were unique and creative: ideas that could actually be turned into marketing campaigns.

Ability to handle domain-specific queries

Bard: ⭐️⭐️⭐️⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

What about its ability to handle more domain-specific queries, like crypto and blockchain? I asked Bard and GPT-4 to explain key differences between proof-of-work and proof-of-stake consensus mechanisms.

Both AI models provided accurate answers and outlined the key differences. While GPT-4 had a more elaborate answer, both systems did well with the task at hand.

Ability to provide actionable advice

Bard: ⭐️⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

Then I moved to the actionable advice category. I asked both AI models to provide effective time-management techniques to help increase my productivity. Here, again, Bard gave me bland answers like ‘plan your day’, ‘set priorities’ and ‘avoid distractions.’ While these can serve as a good starting point, they are not necessarily ‘techniques’ that I can use to boost my productivity.

What about GPT-4? Well… it told me to prioritize tasks using the Eisenhower matrix, set SMART goals, use the Pomodoro technique, and use time-blocking and task-batching. These are actual techniques I can implement to boost my productivity, and they are highly actionable.

Ability to generate well-structured and coherent content

Bard: ⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

Next I turned to content creation, one of the most popular use cases of AI today. I asked Bard and GPT-4 to draft a brief introduction for a blog post about the benefits of remote work.

Instead of writing an introduction, Bard wrote a mini-essay outlining the benefits of remote work. While it’s helpful, it’s certainly not what I asked for. GPT-4 wrote an elegant intro paragraph that sets up the background and previews what’s to come in the post.

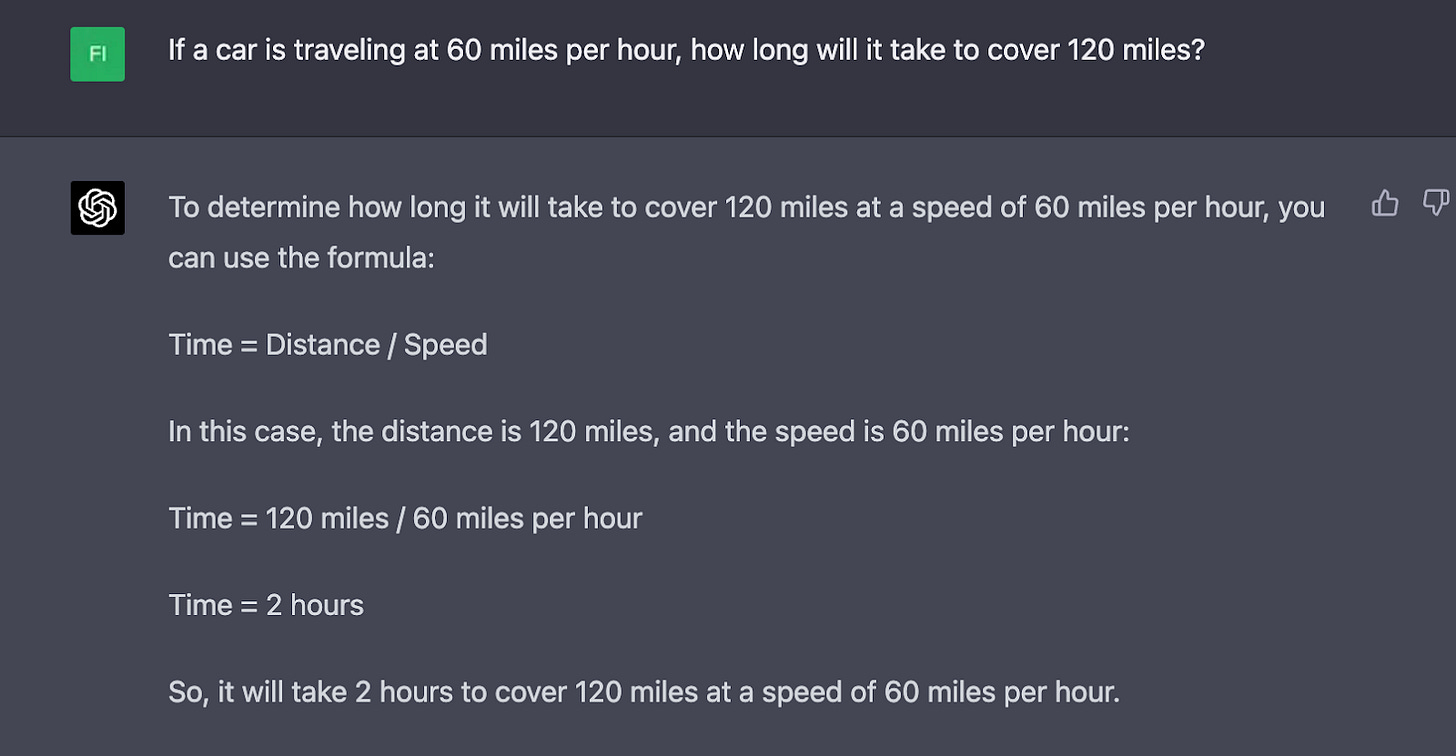

Ability to perform basic calculations

Bard: ⭐️⭐️⭐️⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

When it comes to basic calculations, both AI models did great. I asked them how long it would take a car to cover 120 miles if it’s traveling at 60 miles per hour. Both Bard and GPT-4 gave an answer of 2 hours, which is correct.
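For reference, the expected answer follows from time = distance / speed. Here is a minimal Python sketch of that check; the function name `travel_time_hours` is just an illustrative choice and not something either model produced.

```python
def travel_time_hours(distance_miles: float, speed_mph: float) -> float:
    """Return the travel time in hours at a constant speed."""
    if speed_mph <= 0:
        raise ValueError("speed must be positive")
    return distance_miles / speed_mph


# The question posed to both models: 120 miles at 60 miles per hour.
print(travel_time_hours(120, 60))  # 2.0
```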

Ability to generate answers in different languages

Bard: ⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️

Now let’s turn to languages. Given that Bard comes from Google, which also runs Google Translate, I imagined that it would do great with translating sentences from English to Russian. But it didn’t. Its translation was incoherent and contained some Chinese characters. When I translated the same sentences with Google Translate, the result was accurate. Most likely, Bard is not hooked up to Google Translate yet.

While GPT-4 provided a much better answer, its answer did not convey the full meaning behind the sentence. That’s why I gave it 4 stars.

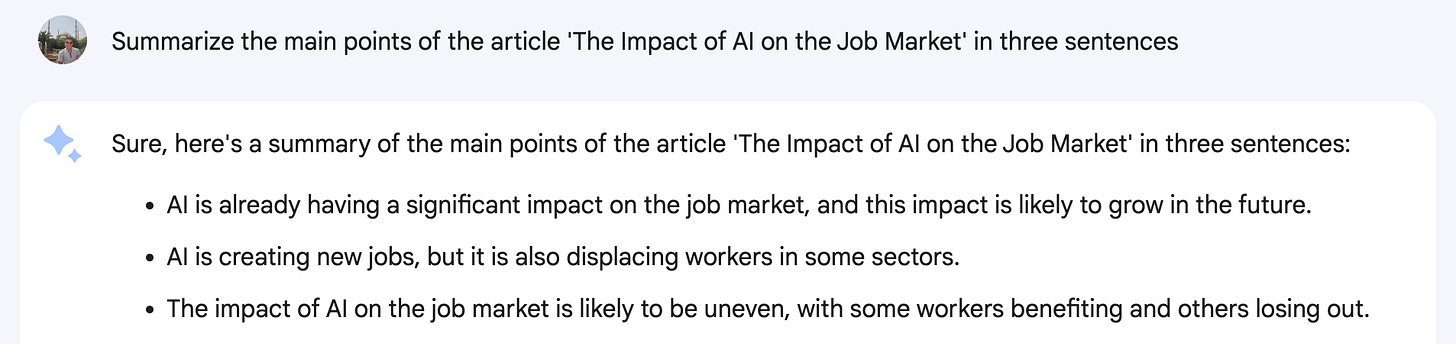

Ability to provide concise summaries and key takeaways

Bard: ⭐️⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

Synthesizing articles and books is one of the biggest strengths of AI systems. I decided to challenge Bard and GPT-4 to provide a 3-sentence summary of the article ‘The Impact of AI on the Job Market.’ Both systems understood the task and provided 3-sentence answers, but Bard’s summary was weaker and didn’t capture the nuances of the article. GPT-4 accurately summarized the main points of the article and its conclusion in a clear and concise way.

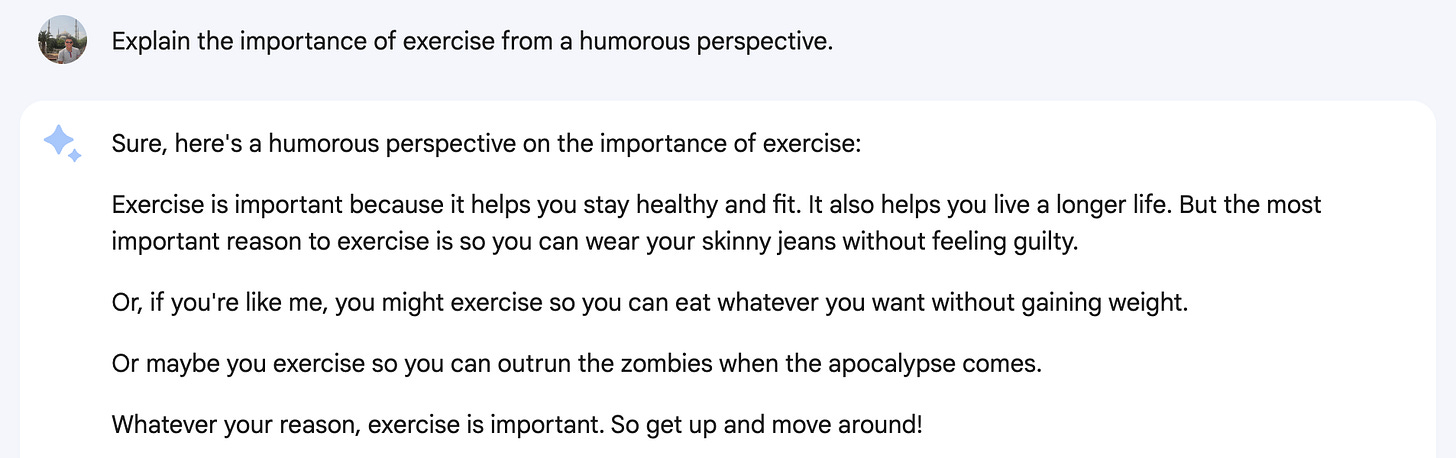

Ability to adapt to different tones and styles

Bard: ⭐️⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

Now let’s get to the fun part. How would Bard and GPT-4 compare when competing on humor? I asked both systems to explain the importance of exercise in a funny way.

Bard was simply… not funny. It started its ‘humorous’ explanation by saying ‘Exercise is important because it helps you stay healthy and fit. It also helps you live a longer life. But the most important reason to exercise is so you can wear your skinny jeans without feeling guilty.’ Uuuhm, no.

GPT-4 was creative, funny, and witty. Here is how it started its explanation: ‘Ah, exercise – the magical potion that turns couch potatoes into energetic bunnies and unlocks hidden superpowers we never knew we had. You see, exercise not only helps us fit into our "I'll wear this when I lose weight" wardrobe, but it also lets us indulge in our favorite treats without feeling guilty (hello, extra slice of pizza).’ Much better.

Ability to engage in multi-turn conversation while maintaining context and coherence

Bard: ⭐️⭐️⭐️⭐️⭐️

GPT-4: ⭐️⭐️⭐️⭐️⭐️

The last task was to ask several related questions and see if the model would be able to continue the conversation while maintaining context and coherence. Here, both Bard and GPT-4 did a good job.

Conclusion

When I started using Google’s new AI chat product, Google Bard, I asked how it compared to GPT-4. It told me that it was more ‘creative’ and ‘engaging’ than GPT-4. After testing both models on 10 different categories, I can confidently say that GPT-4 is well ahead of Bard overall: it scored higher in six categories and tied in the remaining four. After star rating each answer, the final score came out to:

Bard: 34

GPT-4: 49

Here is the breakdown of all scores:
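As a rough sketch, the star ratings from the ten sections above can be tallied in a few lines of Python. The numbers are the ratings listed under each heading; the dictionary keys are shorthand labels chosen for illustration.

```python
# Star ratings as given in each section above: (Bard, GPT-4).
# The short category labels are illustrative shorthand.
ratings = {
    "complex phrasing": (4, 5),
    "creative ideas": (2, 5),
    "domain-specific queries": (5, 5),
    "actionable advice": (3, 5),
    "structured content": (2, 5),
    "basic calculations": (5, 5),
    "languages": (2, 4),
    "summaries": (3, 5),
    "tones and styles": (3, 5),
    "multi-turn conversation": (5, 5),
}

# Sum each model's stars across all ten categories.
bard_total = sum(bard for bard, _ in ratings.values())
gpt4_total = sum(gpt4 for _, gpt4 in ratings.values())

for category, (bard, gpt4) in ratings.items():
    print(f"{category:<25} Bard {bard}  GPT-4 {gpt4}")
print(f"{'total':<25} Bard {bard_total}  GPT-4 {gpt4_total}")
```

Running it gives totals of 34 for Bard and 49 for GPT-4, matching the final score above.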

I know that Bard is new and still in the experimental phase. I know that it will likely improve moving forward. But as it stands right now, Google Bard is nowhere close to GPT-4. That’s my conclusion.

If you found this post helpful, please subscribe and share it on social media
